The good news is that we can use the Keras API to train deep models on a Google Colaboratory TPU. However, there are several important things we need to know in order to do so.

- First of all, we can only use Keras with the TensorFlow backend to run our networks on a Colab TPU.

- We have to pay attention to the dimensionality of our data. Either the feature dimension of our data or the batch size must be a multiple of 128 (ideally both) to get maximum performance from the TPU hardware.

- Currently, Google Colab TPUs don’t support Keras optimizers, so we need to use optimizers directly from TensorFlow, for example: `optimizer=tf.train.AdamOptimizer()`

- Finally, right before calling the Keras `fit` method, we need to convert our Keras model specifically for a TPU, using the special method `keras_to_tpu_model`.
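The conversion step can be sketched as follows. This is a minimal sketch, assuming a TensorFlow 1.x release that still ships `tf.contrib.tpu.keras_to_tpu_model` (it was later deprecated, and `tf.contrib` was removed entirely in TensorFlow 2.0) and a Colab TPU runtime that sets the `COLAB_TPU_ADDR` environment variable. The wrapper name `convert_to_tpu_model` is hypothetical; `model` stands for your already-built `tf.keras` model.

```python
import os


def convert_to_tpu_model(model):
    """Wrap a tf.keras model so it runs on the Colab TPU (hypothetical helper).

    Assumes TensorFlow 1.x with tf.contrib available.
    """
    # Imported here so the helper can be defined even where TF 1.x is absent.
    import tensorflow as tf

    # The Colab TPU runtime exposes the TPU's host:port in this variable.
    tpu_address = 'grpc://' + os.environ['COLAB_TPU_ADDR']
    resolver = tf.contrib.cluster_resolver.TPUClusterResolver(tpu=tpu_address)
    strategy = tf.contrib.tpu.TPUDistributionStrategy(resolver)
    return tf.contrib.tpu.keras_to_tpu_model(model, strategy=strategy)


# Usage on a Colab TPU runtime, after building and compiling `model`:
# tpu_model = convert_to_tpu_model(model)
```

Keeping the import inside the function is a design choice for this sketch only; in a notebook you would normally import TensorFlow at the top.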

After the conversion, we call the `fit` method on the resulting `tpu_model`, for example:

```python
history = tpu_model.fit(x_train, y_train,
                        batch_size=128 * 8,  # 128 samples per TPU core, 8 cores
                        epochs=50)
```
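The `128 * 8` batch size reflects the "multiple of 128" rule from the list above. The arithmetic behind that rule is sketched below in plain Python: `round_up` finds the next multiple of 128 for a dimension (for example, a flattened 28x28 image has 784 features), `pad_features` zero-pads a feature vector to that length, and `global_batch_size` multiplies a per-core batch by the core count. The helper names and the 8-core default (chosen to match `128 * 8` above) are assumptions for illustration, not part of any TPU API.

```python
TPU_ALIGNMENT = 128  # multiple recommended for batch size and feature dimensions
NUM_TPU_CORES = 8    # assumed core count, matching batch_size=128 * 8 above


def round_up(n, multiple=TPU_ALIGNMENT):
    """Return the smallest multiple of `multiple` that is >= n."""
    return -(-n // multiple) * multiple


def pad_features(row, multiple=TPU_ALIGNMENT, fill=0.0):
    """Pad a feature vector with `fill` so its length is a multiple of `multiple`."""
    row = list(row)
    return row + [fill] * (round_up(len(row), multiple) - len(row))


def global_batch_size(per_core=TPU_ALIGNMENT, cores=NUM_TPU_CORES):
    """Total batch size passed to fit(), i.e. per-core batch times core count."""
    return per_core * cores


print(round_up(784))                   # 896
print(len(pad_features([1.0] * 784)))  # 896
print(global_batch_size())             # 1024
```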

The rest of the Keras code for a neural network on a TPU remains essentially the same as the code for running it on a CPU or GPU.

To use Google Colaboratory, which is free, you need the Chrome web browser, a Google account, and a Google Drive account.

Colab itself is available at https://colab.research.google.com

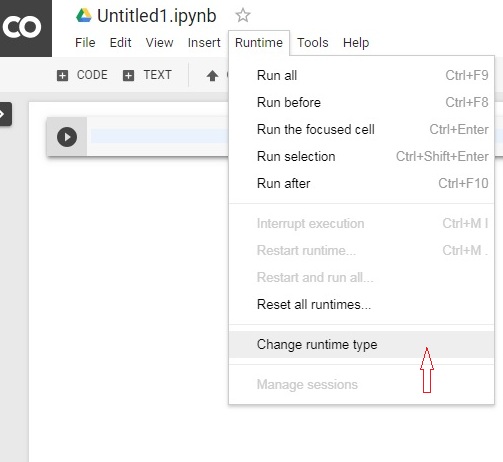

In order to select the TPU mode on Colab you can do the following:

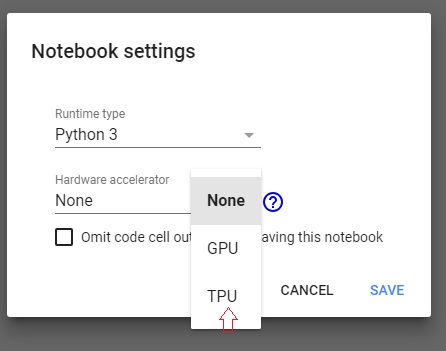

and then:
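Once the TPU runtime is selected, a quick check from a notebook cell can confirm it. This sketch assumes the Colab TPU runtimes that TensorFlow 1.x examples targeted, which publish the TPU's address in the `COLAB_TPU_ADDR` environment variable; newer Colab TPU runtimes may not set it. The function name `colab_tpu_address` is hypothetical.

```python
import os


def colab_tpu_address(env=None):
    """Return the Colab TPU's gRPC address, or None if no TPU is attached."""
    env = os.environ if env is None else env
    addr = env.get('COLAB_TPU_ADDR')
    return 'grpc://' + addr if addr else None


address = colab_tpu_address()
print('TPU found at ' + address if address else 'No TPU runtime detected')
```

Passing `env` explicitly makes the helper easy to test without a real TPU.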

Note that we can save a trained TPU model and use it for inference on a CPU or GPU.
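One way to do this is to save the trained weights and load them into the same architecture built as an ordinary Keras model. The sketch below assumes the TPU model exposes the standard Keras `save_weights(filepath, overwrite=True)` method and the rebuilt model exposes `load_weights(filepath)`; `build_model` is a hypothetical function you write that returns the same architecture, and the file path is illustrative.

```python
def export_for_cpu_inference(tpu_model, build_model, path='tpu_weights.h5'):
    """Copy trained TPU weights into a freshly built CPU/GPU model.

    `build_model` is a hypothetical factory returning the same architecture
    as an ordinary (non-TPU) Keras model.
    """
    # Write the trained weights to an HDF5 file (".h5" selects that format).
    tpu_model.save_weights(path, overwrite=True)
    # Rebuild the architecture outside the TPU and load the saved weights.
    cpu_model = build_model()
    cpu_model.load_weights(path)
    return cpu_model


# Usage (x_test is a placeholder for your own data):
# cpu_model = export_for_cpu_inference(tpu_model, build_model)
# predictions = cpu_model.predict(x_test)
```

Rebuilding the model separately also lets the inference version use a different batch size than the one used for training.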

A TPU is an application-specific integrated circuit (ASIC). As the name suggests, an ASIC is a highly specialized integrated circuit, with an even more specialized architecture than a GPU. The TPU is Google's ASIC designed specifically for running deep neural networks and some other machine learning models, and for many deep-network training workloads it can outperform GPUs.

A TPU is designed to run operations on tensors. Since forward propagation in a neural network is largely a series of vector-matrix multiplications, a TPU can significantly accelerate running deep neural networks.
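To make this concrete, here is a plain-Python sketch of a forward pass through fully connected layers: each layer computes a matrix-vector product, adds a bias, and applies an element-wise activation (ReLU here, an arbitrary choice). The matrix products account for most of the arithmetic, which is the part a TPU's matrix hardware is built to speed up. The weights are made up for illustration.

```python
def matvec(W, x):
    """Matrix-vector product: W is a list of rows, x a list of numbers."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def dense_forward(layers, x):
    """Run x through dense layers, each computing relu(W @ x + b)."""
    for W, b in layers:
        z = [zi + bi for zi, bi in zip(matvec(W, x), b)]  # matrix product plus bias
        x = [max(0.0, zi) for zi in z]                    # element-wise ReLU
    return x


layers = [
    ([[1, 2], [3, 4]], [0, -10]),  # 2 inputs -> 2 hidden units
    ([[1, -1]], [0]),              # 2 hidden units -> 1 output
]
print(dense_forward(layers, [1, 1]))  # [3.0]
```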

References for this short tutorial:

https://cloud.google.com/tpu/docs/tpus

https://cloud.google.com/tpu/docs/performance-guide

https://www.youtube.com/watch?v=60xbDEpA49M&t=350s (This video is in Russian)